Towards an Equitable Ecosystem of Artificial Intelligence

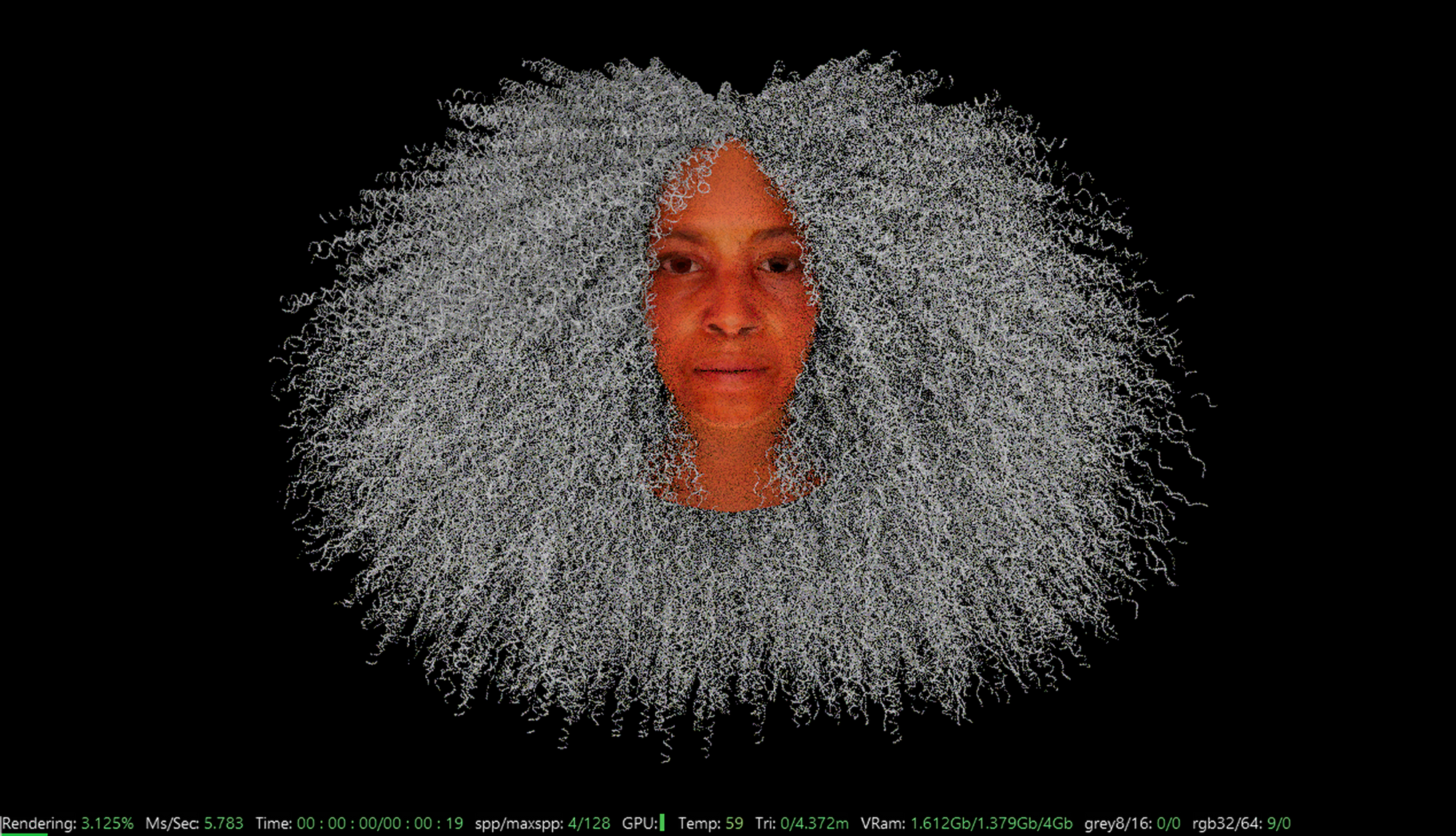

Stephanie Dinkins is a transmedia artist who creates platforms for dialogue about artificial intelligence (AI) as it intersects race, gender, aging, and our future histories. She is particularly driven to work with communities of color in promoting and co-creating more equitability within AI ecosystems. Dinkins exhibits and publicly advocates for equitable AI internationally. As a Technology Resident at Pioneer Works in 2018, Dinkins worked on her project Not the Only One, with tech worker Angie Meitzler, which included the development of an interactive conversational sculpture using deep learning and the multigenerational story of her family as material. The video captures Dinkins's work on this project and her work with Bina48 during her residency.

Our Director of Technology, Tommy Martinez, caught up with Dinkins to see what she is working on now and hear her thoughts on building better futures and “NOWS” through an interrogation of Artificial Intelligence systems.

You’ve become a central figure for work that’s being done around exposing bias and inequity in AI systems. Can you tell us a little bit about what problems lie at the intersection of “machine learning” and social architecture? Why should we be thinking about this?

The more I learn about AI and its implementations, the more concerned I become for BIPOC folks, queer folks, and people with disabilities who stand to be left behind, at best, or actively vilified, at worst, by these AI ecosystems that are increasingly becoming invisible arbiters of human interaction. We don’t even truly understand how AI architectures make decisions about an unfathomable number of frameworks that influence the prospects of our lives. And, for the most part, the creation of these systems is being guided by capitalist profit motives and the very particular ethos of unrepresentative tech enclaves such as Silicon Valley.

Thankfully additional centers of AI development are popping up around the world (but they are still often funded by tech titans like Google and Amazon). It is untenable for small pockets of global society to develop systems that are increasingly orchestrating outcomes for the rest of us. Especially when we are talking about systems that impact finance, medicine, law, immigration, work, social interactions to name just a few touched by AI. Even love and care will be impacted. Bottom line, we all need to be thinking about artificially intelligent ecosystems because they ever increasingly impact the way people live, love, and remember. And because these systems that will touch most of us in some way are being developed by a tiny subsection of society that seems content to develop in ways that maximize profit instead of maximizing potential, well-being, and mutual goals.

Your earlier work such as The Book Bench Project and OneOneFullBasket made use of these simple but very profound activations. The Book Bench Project consisted of a bench placed outside that you used to stage a performance wherein you sat and “read quietly” for prolonged periods of time. That act was used to start a conversation around privilege, access to information and resources. Dialogue is a theme that recurs throughout your work in both high-tech and more immediate ways. Can you talk about what that word, “dialogue,” means to you and for your work?

I believe in a good question and dialogue with others to solution around questions. I believe even more in the creativity of people, especially people who are relegated to the fringes, told they are inferior or incapable of the same things as those who currently hold power. I am also very aware of invisible lines drawn in the sand, both real and imagined, that often serve as barriers to entry to certain fields. I don’t have the answers, so dialogue is a way to start to devise methods of minimizing impediments and allowing our creativity to devise new paths toward outcomes that support and sustain community, starting at home and rippling out to our most remote global brothers and sisters.

Take Book Bench, for example. It was a very local public project I did under the auspices of the Laundromat Project. That project was born out of observations I made as a new resident of Bedford-Stuyvesant, Brooklyn. The socio-economic reality there was so different from where I grew up in Staten Island a mere twenty-nine miles away. The two places are a part of the same city but supported two very different realities. The resources available to me in Staten Island seemed to outpace resources available to the folks in Bed-Stuy tenfold. I could not coalesce the differences, and hearing my Brooklyn neighbors express that they do not expect much from the powers-that-be hurt me. So, the idea of Book Bench was to make a space to model possibilities and make space for conversations about what we want and can expect to happen in our neighborhood.

AI.Assembly is a direct outpouring of my faith in people’s ability to be creative and find solutions to problems impacting them. [...] We’ve gathered people of color and allies from many walks of life. Artists, engineers, technologists, sociologists, entrepreneurs, students, [and] everyday folks all inhabit the same space and discuss the ever-expanding technological landscape. We try to figure out what we have to offer the technological future and how we can craft technology that serves us in the ways that we want and deserve to be served. The aim is to seed self-sustaining hubs of experimentation, research, thought, and action that challenge the status quo in AI.

I am also activated by the idea of “Afro-now-ism,” an idea that bloomed for me through AI.Assembly. I define Afro-now-ism as a willful practice that imagines the world as one needs it to be to support successful engagement—in the here and now. Instead of waiting to reach the proverbial promised land, also known as a time in the future that may or may not manifest in your lifetime, Afro-now-ism is taking the leap and the risks to imagine and define oneself beyond systemic oppression. It requires conceiving yourself in the space of free and expansive thought and acting from a critically integrated space, instead of from opposition, which often distracts us from more community sustaining work.

Afro-now-ism asks how we liberate our minds from the infinite loop of repression and oppositional thinking America imposes upon those of us forcibly enjoined to this nation. What incremental changes do we make to our internal algorithms to lurch our way to ever more confident means of thriving in this world? The question is not only what injustices are you fighting against, but what do you in your heart of hearts want to create. This is a pointed question for black folks but includes the rest of society as well. Our fates, whether we like it or not, acknowledge it or not, are intermingled. It depends on the myths we tell about ourselves and each other. Though it is not immediately legible, we sink or swim together.

Apart from creating the space and framework for others to meet and discuss more compassionate approaches to building AI, how is your own work directly engaged with progress in the field?

At this point, my practice is largely about modeling different ways of working with AI technology and imagining how such technologies might be used to bolster community in a variety of environments, from local community centers to academia to large institutions. I often interrogate my decision to accept such opportunities. I wonder if going inside is helpful to my work and the communities I am most concerned with. In the end, I have decided I have a lot to learn from working within institutions for a time, and they have a lot to learn from me. Being a black woman, my very presence often challenges the status quo of such places. The fact that the questions I'm asking of the technology are on par with the questions the institutions are researching says a lot about opportunities for changing the way things work through technology. Take the ideas of small community-derived data and data sovereignty that are central to my project Not the Only One (N’TOO), a deep learning AI entity that attempts to convey the history of a black American family. The project is a composite, long-term portrait based on oral history. Some refer to it as a living archive. I have come to understand the resulting talking AI as a new generation being added to the lineage of the project that is, by way of the information it contains, much older than those of us who inform the project and, because of the limitation of AI technologies, very young.

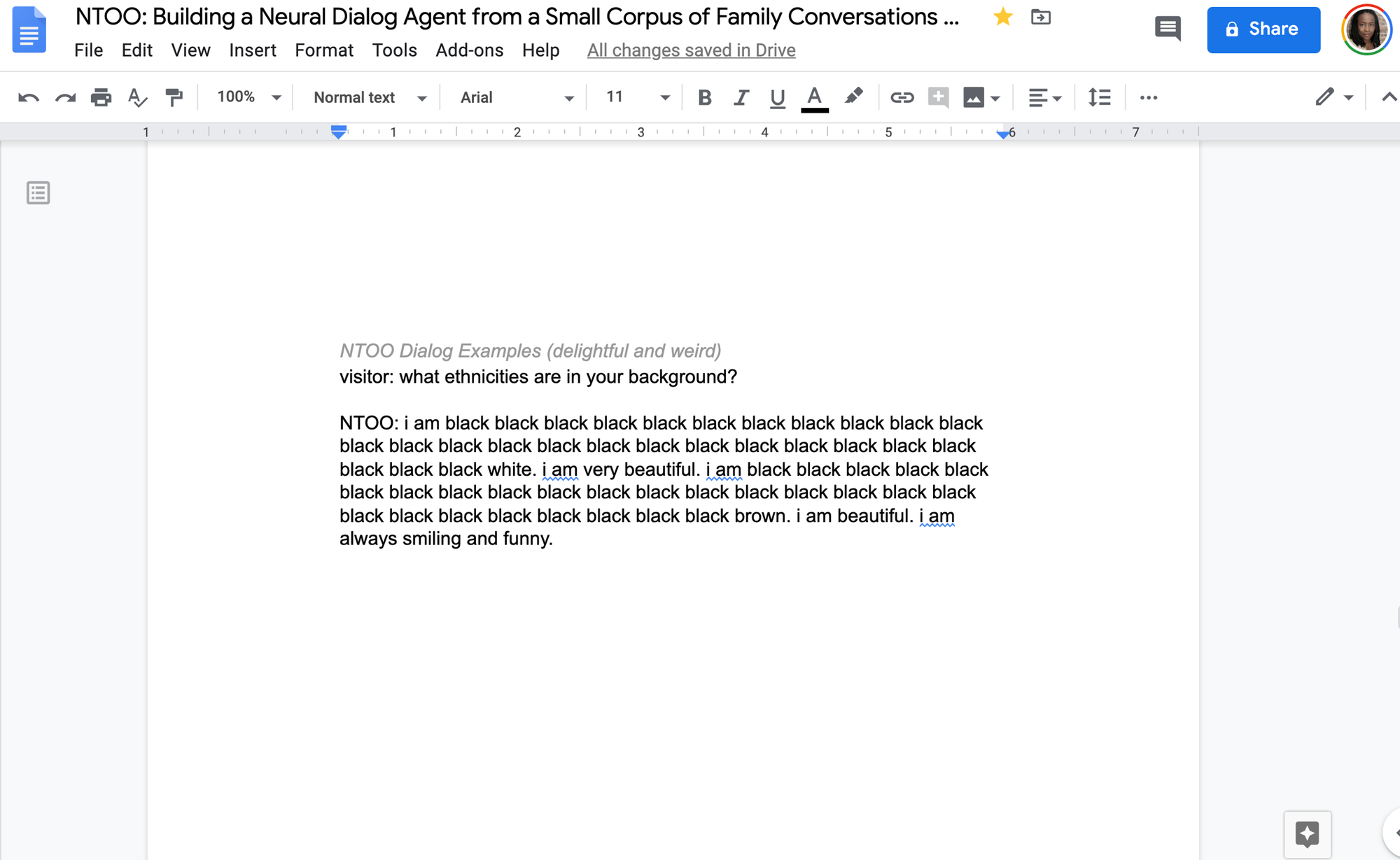

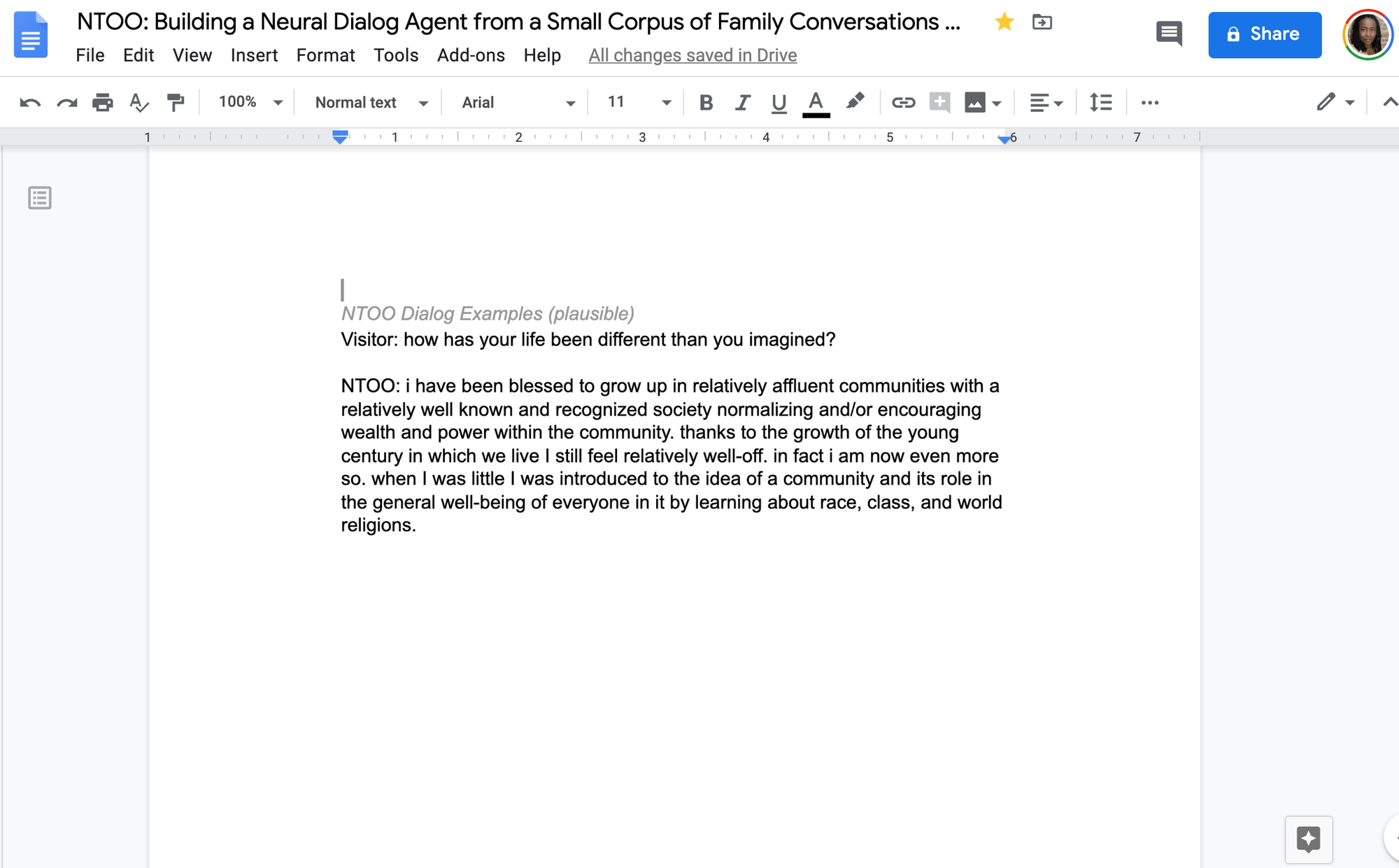

N’TOO uses a limited amount of data as the basis for its replies because our repository of interviews is small and because pre-training on large, widely available datasets tends to skew the results away from the ethos of the family and toward more general conversation. The project would be more satisfying to most who encounter it if I used a larger dataset and focused the conversation by scripting N’TOO’s answers… But continuity is not the point.

N’TOO is not aimed at serving the desires and will of those who engage it or even those making it, like Siri or Alexa. This means it can be temperamental and does not always respond when spoken to. It certainly does not provide the scripted answers we often expect. At present, it is like a temperamental two-year-old learning the ethos of a family and seeking a kind of autonomy for itself. It is an experimentation toward sovereign AI archives that communicate well using the data a community produces for itself and identify with big data based systems. When I first started working on the project I was often told small data will not work. I think small community data is crucial and the only way for families, tribes, communities, or towns to maintain a holistic, nuanced, culturally sound image of self. I am now hearing small data is hard but perhaps possible. I am also running into papers on the topic. This encourages me to keep asking what I call the two-year-old questions of technology. When someone tells me something can’t be done I ask “why” repeatedly. I then go about trying to achieve, from a novice position, the outcomes I want to see. I do this to model what is possible if you push through and around the generally accepted rules. If we are remaking the world through smart technologies, why wouldn’t we optimize it to support the well-being of the widest swath of people on the planet, instead of optimizing profit?

The landscape of this field has changed a lot in the past six years. There are certainly many more technologists as well as artists who are engaging with this topic. Have you seen any improvements in the designs of these systems? What successes have we had? What are you most fearful of still?

Since I began this work in 2014, talking about inclusivity and equity in the AI ecosystem has expanded. I do see change, but I suspect a lot of this is window dressing or checking boxes rather than actually taking on the hard work toward tangible results. [...] These are often perspectives that allow the companies supporting them to feel good about themselves but don't actually provide active deep change within society, especially when that change would be hard or expensive. Talk of equity in AI seems to be shifting towards decolonizing AI and trying to imbue AI with the right values. Once the talk shifts to values, the question is inevitably whose values will win. I’ve heard high-level conversations about this artificial intelligence that is starting to sound and feel like a new arms race. America vs. China seems to be the prime example at the moment. I don’t think the world, especially common people most likely to be impacted by AI with little recourse, can afford this approach.

The rapid proliferation of AI into social, political, and cultural contexts provides opportunity to change the way we define and administer crucial societal relations. [...] Through AI and the proliferation of smart technologies everyday people, globally, can help define what the technological future should look like, how it should function, and design methods to help achieve our collective goals. Direct input from the public can also help infuse our AI ecosystems with nuanced ideas, values, and beliefs toward the equitable distribution of resources and mutually beneficial systems of governance.

Through all the work I have been doing I have come to consider questions of human futurity. I wonder what humans must do to position ourselves as agile members of an ever-changing continuum of intelligences sandwiched between technology (AI, biotech, gene editing...) and ever greater understandings of the intelligence of natural systems, communicative powers of mycelium networks. These are questions of constant redefinition rather than statements of knowing, that are more urgent for communities of color. People can’t hold onto static definitions of who, where, and how we operate. Such "truths" sustain power and maintain the place of certain humans in the world.

Widely deployed AI can support bottom-up decision making and provide the public means to directly inform the systems that govern us while also empowering the governed. Who is working to use it as such? I think that is a question for all of us to consider and take on.
